In our line of work at Arkieva, when we ask business folks "What is your forecast accuracy?", we can get a full range of answers, from 50% (or lower) to 95% (or higher), depending on whom we ask in the same business. How is this possible? Imagine a management team being given this range of numbers for the same metric. I am sure they would not be happy. In this blog post, we will consider this question and suggest ways to report accuracy so that management gets a realistic picture of this important metric.

Metrics for Measuring Demand Planning Accuracy

Forecasting and demand planning teams measure forecast accuracy as a matter of course. Companies use many standard, and some not-so-standard, formulas to determine forecast accuracy and/or error. Some commonly used metrics include:

- Mean Absolute Deviation (MAD) = ABS (Actual – Forecast), averaged over the periods measured

- Mean Absolute Percent Error (MAPE) = 100 * (ABS (Actual – Forecast) / Actual), averaged over the periods measured

- Bias (discussed in separate posts)
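For a single item and period, the two formulas above can be sketched as small Python functions. This is a minimal illustration, assuming one actual/forecast pair per call; the names `absolute_error` and `mape` are hypothetical, not taken from any particular planning tool.

```python
def absolute_error(actual: float, forecast: float) -> float:
    """Absolute deviation for one item: ABS(Actual - Forecast)."""
    return abs(actual - forecast)


def mape(actual: float, forecast: float) -> float:
    """Absolute percent error for one item, in percent.

    MAPE divides by the actual, so it is undefined when the actual is zero.
    """
    if actual == 0:
        raise ValueError("MAPE is undefined when the actual is zero")
    return 100 * absolute_error(actual, forecast) / actual


print(absolute_error(100, 150))  # 50
print(mape(100, 150))            # 50.0
```

Averaging these per-period values across periods gives the "mean" in MAD and MAPE.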

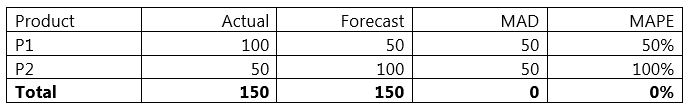

All these metrics work well at the level at which they are calculated. For example, if a business has 10,000 SKU–customer combinations, these metrics can be used to calculate the error of each individual combination. However, when the metric has to be calculated at an aggregate level, the positive and negative errors cancel each other out, and the result is a picture much rosier than reality. Let us look at an example to understand this:

As is evident from this example, both the MAD and MAPE formulas give zero error when calculated at the total level! Nothing could be further from the truth. Operations folks using this forecast to plan their operations would be very unhappy to hear that the error was zero, because, as far as they are concerned, the error is very high at the level that matters to them.
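The cancellation can be reproduced with a short Python sketch. The actual volumes (100 and 50 units) match the worked example later in this post; the forecasts (50 and 100 units) are assumed, chosen as one combination consistent with item-level MAPEs of 50% and 100% and a zero aggregate error, since the exact figures live in the example table.

```python
# Actual volumes per product (as in the worked example in this post).
actuals = {"P1": 100, "P2": 50}
# ASSUMED forecasts: one combination that reproduces the item-level MAPEs
# (50% and 100%) and the zero aggregate error; not necessarily the table's values.
forecasts = {"P1": 50, "P2": 100}

# Item level: measure each product's error on its own.
item_abs_errors = {p: abs(actuals[p] - forecasts[p]) for p in actuals}
item_mapes = {p: 100 * item_abs_errors[p] / actuals[p] for p in actuals}

# Aggregate level: sum first, then measure. The +50 and -50 errors cancel.
total_actual = sum(actuals.values())
total_forecast = sum(forecasts.values())
total_abs_error = abs(total_actual - total_forecast)
total_mape = 100 * total_abs_error / total_actual

print("Item-level absolute errors:", item_abs_errors)  # {'P1': 50, 'P2': 50}
print("Item-level MAPEs:", item_mapes)                  # {'P1': 50.0, 'P2': 100.0}
print("Aggregate absolute error:", total_abs_error)     # 0
print("Aggregate MAPE:", total_mape)                    # 0.0
```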

If we wanted to report this error to management, what number should be reported to them? Here are some suggestions:

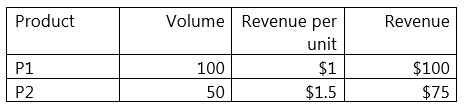

1. Calculate the error at the low level, then weight the results by some other contributing factor, such as revenue or volume (units). Let us see what this looks like for the given example. Assume the following numbers for the two products in question:

Equally weighted forecast error (or simple average)

= AVERAGE (MAPE-P1, MAPE-P2)

= AVERAGE (50%, 100%)

= 75%

Revenue-weighted forecast error

= (R-P1 * MAPE-P1 + R-P2 * MAPE-P2) / (R-P1 + R-P2)

= (100 * 50% + 75 * 100%) / (100 + 75)

= 125 / 175

= 71.43%

Volume-weighted forecast error

= (V-P1 * MAPE-P1 + V-P2 * MAPE-P2) / (V-P1 + V-P2)

= (100 * 50% + 50 * 100%) / (100 + 50)

= 100 / 150

= 66.67%
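The three averages above can be reproduced with a short Python sketch using the example's MAPEs, revenues, and volumes. `weighted_average` is a hypothetical helper name; equal weighting is simply the special case where every weight is 1.

```python
# Item-level MAPEs (percent) and weights taken from the worked example above.
mapes = {"P1": 50.0, "P2": 100.0}
revenue = {"P1": 100, "P2": 75}
volume = {"P1": 100, "P2": 50}


def weighted_average(values: dict[str, float], weights: dict[str, float]) -> float:
    """Sum of weight * value, divided by the sum of the weights."""
    total_weight = sum(weights[k] for k in values)
    return sum(weights[k] * values[k] for k in values) / total_weight


equal = weighted_average(mapes, {k: 1 for k in mapes})
by_revenue = weighted_average(mapes, revenue)
by_volume = weighted_average(mapes, volume)

print(f"Equally weighted: {equal:.2f}%")       # 75.00%
print(f"Revenue-weighted: {by_revenue:.2f}%")  # 71.43%
print(f"Volume-weighted:  {by_volume:.2f}%")   # 66.67%
```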

As one can see, weighting the error by different factors produces different results. However, all three options depict the true picture better than simply calculating the error at the high level, which resulted in 0% error!

As for a recommended way of reporting forecast error to management, we suggest weighting it by volume if you are forecasting volume, by revenue if you are forecasting revenue, and so on. Following this advice also makes the formula easy to calculate. For example, after some algebraic gymnastics, the formula for volume-weighted MAPE becomes:

VWMAPE = Sum of absolute errors / Sum of actual volumes (assuming one was forecasting volumes).
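The "algebraic gymnastics" amount to a single cancellation, assuming each item's weight is its actual volume $A_i$, the same quantity that sits in the denominator of its MAPE. With $F_i$ as the forecast for item $i$:

```latex
\mathrm{MAPE}_i = 100 \cdot \frac{|A_i - F_i|}{A_i}

\mathrm{VWMAPE}
  = \frac{\sum_i A_i \cdot \mathrm{MAPE}_i}{\sum_i A_i}
  = \frac{\sum_i A_i \cdot 100 \cdot \frac{|A_i - F_i|}{A_i}}{\sum_i A_i}
  = 100 \cdot \frac{\sum_i |A_i - F_i|}{\sum_i A_i}
```

If the weights are anything other than the actual volumes (revenue, for instance), the $A_i$ terms do not cancel and the full weighted-average form has to be used.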

In this example, VWMAPE

= SUM (50, 50) / SUM (100, 50)

= 100 / 150

= 66.67%, which matches the value above.
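A short Python sketch can confirm that the shortcut and the long form agree for this example. `vwmape` is a hypothetical function name; the shortcut is only valid when the weights are the actual volumes used in each MAPE denominator.

```python
# Actual volumes and absolute errors from the worked example above.
actuals = [100, 50]
abs_errors = [50, 50]


def vwmape(actuals: list[float], abs_errors: list[float]) -> float:
    """Volume-weighted MAPE in percent: sum of absolute errors / sum of actuals.

    Valid as a shortcut only when each item's weight is its actual volume.
    """
    return 100 * sum(abs_errors) / sum(actuals)


# Long form: compute each item's MAPE, then weight it by the item's actual volume.
item_mapes = [100 * e / a for e, a in zip(abs_errors, actuals)]
long_form = sum(a * m for a, m in zip(actuals, item_mapes)) / sum(actuals)

print(f"{vwmape(actuals, abs_errors):.2f}%")  # 66.67%
print(f"{long_form:.2f}%")                    # 66.67%
```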

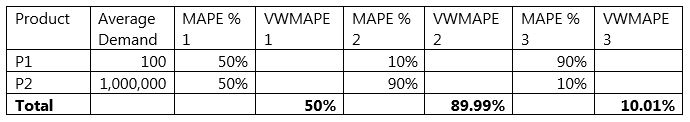

The main advantage of using a weighting scheme is that it lets a user put more emphasis on items where the volume (or revenue) is high. A 10% error on an item with an average demand of 100 units carries much less weight than a 10% error on an item with an average demand of 1 million units. This is as it should be, as the example in the table above makes clear.
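To see the emphasis effect, the sketch below compares a simple average with the volume-weighted MAPE for two hypothetical items (illustrative numbers, not from this post): a small item with a large percentage error and a large item with a modest one.

```python
# HYPOTHETICAL items: a small item forecast badly, a large item forecast well.
items = [
    {"name": "small", "actual": 100, "abs_error": 50},            # 50% error
    {"name": "large", "actual": 1_000_000, "abs_error": 100_000},  # 10% error
]

# Simple average: every item counts equally, regardless of size.
item_mapes = [100 * it["abs_error"] / it["actual"] for it in items]
simple_avg = sum(item_mapes) / len(item_mapes)

# Volume-weighted MAPE: sum of absolute errors over sum of actual volumes.
volume_weighted = 100 * sum(it["abs_error"] for it in items) / sum(it["actual"] for it in items)

print(f"Simple average MAPE:  {simple_avg:.2f}%")       # 30.00%
print(f"Volume-weighted MAPE: {volume_weighted:.2f}%")  # 10.00%
```

The simple average is pulled up to 30% by the tiny item, while the volume-weighted figure stays close to the large item's 10% error.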

The volume-weighted MAPE is one of the recommended metrics for reporting forecast error to management. It provides a more realistic picture of the forecast error (or accuracy) of the forecasting process at the aggregate level, which is all that a management team can realistically monitor given its time constraints.

Do you report forecast accuracy to your management? If so, I am interested in hearing from you.
